From Foundation to Frontier

How Business Communication Can Drive AI Innovation

This post summarizes key arguments from my keynote presentation at the 90th Annual Association for Business Communication Conference in Long Beach, California. The talk examined how business and professional communication expertise positions our field to lead rather than follow AI innovation.

Foundation to Frontier | Slideshow

Our field is already focused where it matters most: 43% of recent Business and Professional Communication research addresses the R&D opportunity zone—collaborative AI applications where workers want partnership, not automation. The next step is claiming leadership: translating this foundation into workplace partnerships where we research and co-design the collaborative AI systems workers actually want.

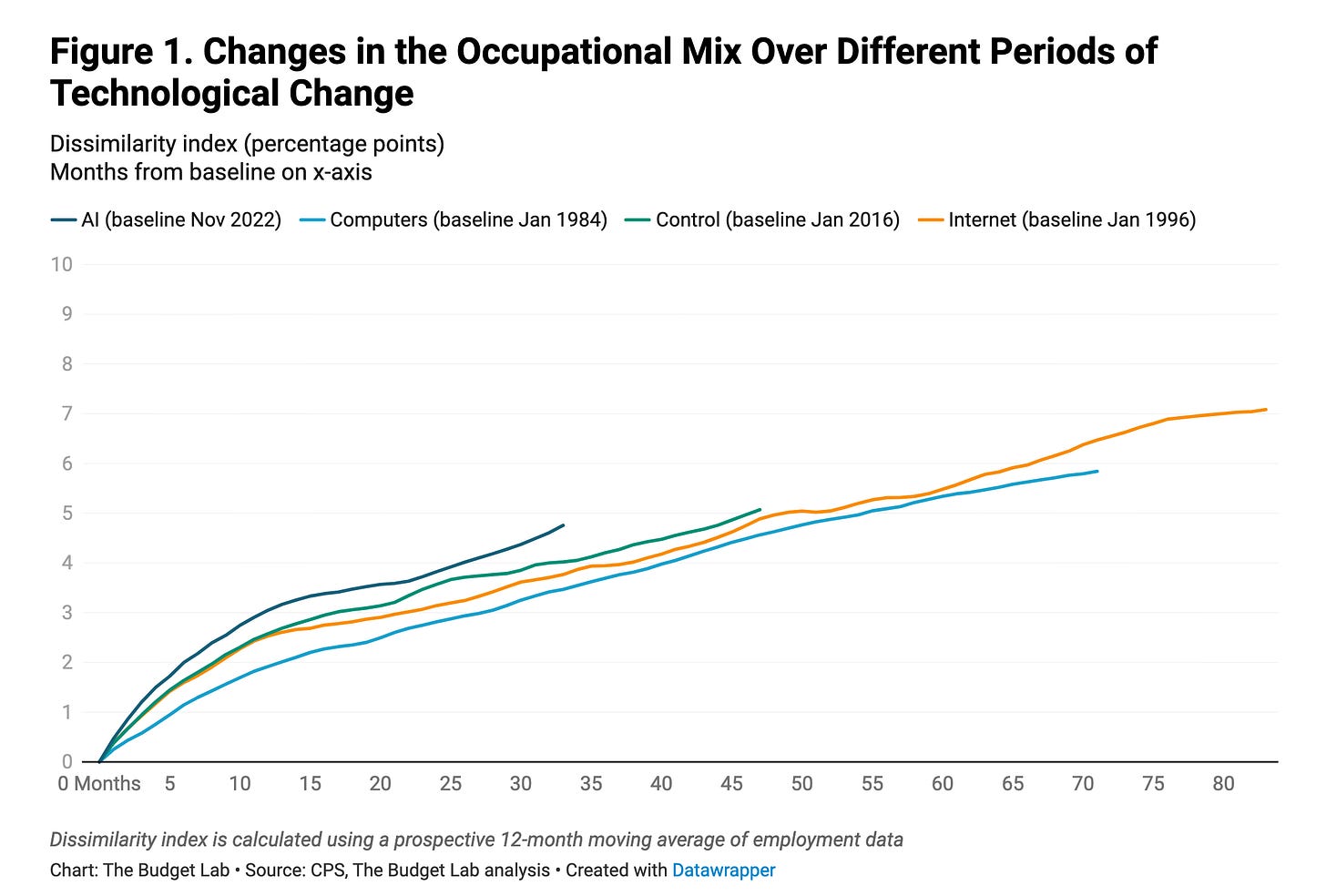

The headlines say AI will automate 80-90% of all work. When researchers examined actual labor market data since ChatGPT’s release, they found something different. Job transition rates have not increased compared to previous technological waves—computers in 1984, the internet in 1996 (Gimbel et al., 2025). The broader labor market shows no discernible disruption.

However, recent high-frequency payroll data reveal concentrated effects: early-career workers (ages 22-25) in AI-exposed occupations have experienced a 13% relative decline in employment, while more experienced workers in the same fields remain stable (Brynjolfsson et al., 2025). Critically, these declines concentrate in occupations where AI automates rather than augments human work. In other words, whether AI automates or augments a job is the differentiator, which helps explain why the impact on most of the workforce has been muted so far.

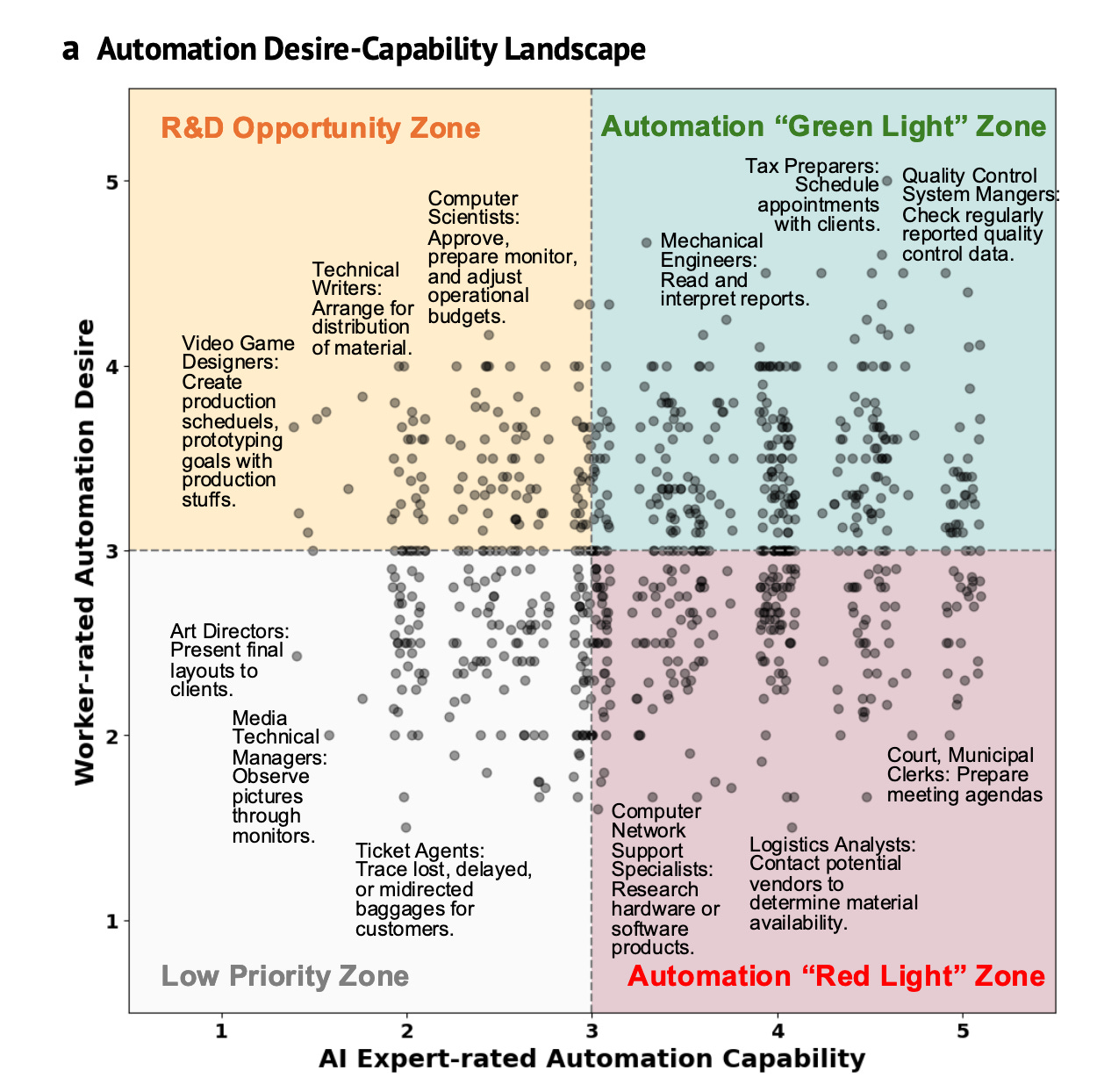

More revealing is recent research mapping worker preferences against AI capabilities. Shao et al. (2025) developed a “desire-capability landscape” using their Human Agency Scale (H1-H5), surveying 1,500 workers across 844 tasks in 104 occupations. Workers overwhelmingly desire collaborative partnerships with AI—level H3 “equal partnership” where humans and AI work together. Current development focuses heavily on full automation. There’s a massive underserved middle: an “R&D opportunity zone” where workers want augmentation, not replacement.

The four zones identified:

Green Light Zone: High capability, high desire for automation

Red Light Zone: High capability, low desire for automation

R&D Opportunity Zone: Moderate capability, high desire for collaboration

Low Priority Zone: Low capability, low desire
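The four zones can be read as a simple two-axis lookup. The sketch below is only an illustration of that idea, not the method used by Shao et al.: the 0-to-1 scores, the single 0.5 threshold, and the names `Zone` and `classify_task` are all assumptions made for this example, and the binary cut simplifies the "moderate capability" of the R&D zone to "below threshold."

```python
from enum import Enum


class Zone(Enum):
    GREEN_LIGHT = "Green Light Zone"
    RED_LIGHT = "Red Light Zone"
    RND_OPPORTUNITY = "R&D Opportunity Zone"
    LOW_PRIORITY = "Low Priority Zone"


def classify_task(capability: float, desire: float, threshold: float = 0.5) -> Zone:
    """Place a task in one of the four zones.

    capability: hypothetical 0-1 score for how well AI can do the task today.
    desire: hypothetical 0-1 score for how much workers want AI involved.
    threshold: assumed cut between "low" and "high" on both axes.
    """
    if not (0.0 <= capability <= 1.0 and 0.0 <= desire <= 1.0):
        raise ValueError("capability and desire must be between 0 and 1")

    high_capability = capability >= threshold
    high_desire = desire >= threshold

    if high_capability and high_desire:
        return Zone.GREEN_LIGHT
    if high_capability:
        # AI could do it, but workers do not want it to.
        return Zone.RED_LIGHT
    if high_desire:
        # Workers want help that AI cannot yet fully deliver.
        return Zone.RND_OPPORTUNITY
    return Zone.LOW_PRIORITY


# Hypothetical task: workers want AI support, capability is still limited.
print(classify_task(capability=0.4, desire=0.9).value)  # R&D Opportunity Zone
```

Even in this toy form, the lookup makes the argument visible: the R&D zone is defined by a gap between what workers want and what current systems deliver.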

The expertise to develop these collaborative AI systems exists. It’s not where the tech industry has been looking.

Context Engineering and Communication Expertise

The latest focus in AI development is “context engineering.” As Andrej Karpathy describes it: “the delicate art and science of filling the context window with just the right information for the next step.” Making AI agents work in real workflows requires knowing what information to provide, how to structure reasoning processes, and how to ensure meaningful outcomes.

This is what communicators do. We curate situated knowledge—transforming information floods into precisely targeted briefing documents. We design communication processes—operationalizing invisible cognitive work into teachable frameworks. We orchestrate communicative action—ensuring outputs become outcomes through stakeholder engagement and iteration.

Communication as the original context engineering

From ancient mnemonic techniques to modern organizational learning systems, our field has provided just the right information for the next step. Consider how communication practices map directly onto AI context engineering challenges:

Memory (contextual information): We curate situated knowledge for projects and organizational learning. AI context engineering requires providing relevant contextual information to AI systems.

Thought (reasoning processes): We structure workflows using rhetorical analysis and genre strategies. AI context engineering requires designing reasoning processes and prompting protocols.

Agency (meaningful action): We orchestrate creative collaboration and stakeholder engagement. AI context engineering requires ensuring meaningful outcomes through human-in-the-loop workflows.
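To make the mapping concrete, here is a minimal sketch of how the three dimensions might be assembled into a briefing for an AI system. It is an illustration under stated assumptions, not a description of any particular tool: the `ContextBrief` class, its field names, the section titles, and the sample content are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ContextBrief:
    """Hypothetical container for the three dimensions described above."""

    memory: List[str] = field(default_factory=list)   # situated knowledge
    thought: List[str] = field(default_factory=list)  # reasoning steps to follow
    agency: List[str] = field(default_factory=list)   # human-in-the-loop checkpoints

    def render(self) -> str:
        """Turn the brief into plain text suitable for a context window."""
        sections = [
            ("Context", self.memory),
            ("Process", self.thought),
            ("Human review", self.agency),
        ]
        lines: List[str] = []
        for title, items in sections:
            if not items:
                continue  # skip empty sections rather than pad the context
            lines.append(f"{title}:")
            lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)


# Hypothetical example content for a client status update.
brief = ContextBrief(
    memory=["Audience: client executive team", "Project is two weeks behind schedule"],
    thought=["Lead with the decision needed", "Explain the cause before the remedy"],
    agency=["Project lead approves the draft before it is sent"],
)
print(brief.render())
```

The code itself is simple. The real work is deciding what belongs in each list, which is an audience and situation analysis of the kind communicators already do.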

Technical teams building AI agents can engineer the systems. They’re discovering they need rhetorical context engineering to make those systems work for human collaboration.

Evidence from Research

Our field has built strong foundations in AI research. Getchell, Carradini, Cardon and colleagues published landmark early work. Carradini edited influential special issues in the Journal of Business and Technical Communication. Since 2022, across our flagship journals—Business and Professional Communication Quarterly, International Journal of Business Communication, and Journal of Business and Technical Communication—more than 40 articles have addressed AI literacy, ethical critique, and pedagogical applications.

What my research demonstrates is that communication isn’t just foundation—it’s frontier.

Case Study 1: Developing AI Literacies Through Self-Regulated Learning

In our recently published study (Anders & Dux Speltz, 2025), we examined how students develop generative AI literacies when given authentic problems and human-centered scaffolding. Working with students across two semesters, we documented their approaches to using AI for meaningful communication projects.

Key finding: When students engaged AI through self-regulated learning frameworks, they demonstrated sophisticated metacognitive strategies:

Planning phase: Students defined clear objectives and evaluation criteria before using AI, aligning tool use with personal and professional goals

Iteration phase: Students matched AI capabilities and limitations to their tasks, monitoring quality and relevance while maintaining creative vision

Evaluation phase: Students reviewed both project outcomes and process effectiveness

The study challenges assumptions about students automating their learning. Given the right experiences—authentic problems, appropriate scaffolding for both AI literacy and self-regulated learning—students take responsible and creative approaches. This work demonstrates how foundational literacies push toward frontier capabilities.

Case Study 2: AI-Facilitated Interdisciplinary Innovation

Research by Dell’Acqua et al. (2025) examining “AI as teammate” influenced our Applied AI Hackathon design. Their study compared four conditions: teams with AI, teams without AI, individuals with AI, individuals without AI. Teams with AI outperformed all others, but individuals with AI outperformed teams alone. The top 10% of solutions came overwhelmingly from teams with AI.

Their analysis: innovative solutions require multiple expertise domains. AI helped translate between technical and product perspectives, supplementing individual knowledge gaps and facilitating interdisciplinary collaboration.

We implemented these insights in our two-week intensive hackathon at Iowa State’s Student Innovation Center. Using facilitation materials structured around the double diamond process, we created cycles where:

Students collaborated using human methods first: brainstorming, discussion, analog techniques

AI augmented the process: helping refine, converge, or explore ideas at each stage

Human creativity remained central: AI translated human collaboration into the next innovation stage

Outcomes: Students produced outstanding work. More importantly, in just two weeks they showed significant growth in creative self-efficacy (confidence in achieving creative outcomes) and psychological capital (psychological resources for innovative work). These measures predict stronger creative performance, problem-solving, innovative behavior, and creative leadership.

“Our challenge resulted in a 12% increase in creative self-efficacy and an 8% gain in psychological capital – direct indicators of enhanced innovation capacity.”

Targeting the R&D zone—where AI enhances rather than replaces human capabilities—doesn’t just achieve better results. It actually strengthens core human capacities.

Strategic Research Opportunities

To understand where our field stands, I mapped nearly 40 recent articles from our flagship journals against the WORKBank desire-capability framework. The results show strong alignment with strategic priorities. The largest concentration—43% of research—falls in the R&D Opportunity Zone, addressing collaborative AI applications where workers desire partnership rather than automation. This includes work on AI literacy and ethics (pedagogical focus), organizational sensemaking and soft skills amplification (workplace focus), and maintaining construct validity in AI-assisted research methods.

We also have valuable critical work in the Red Light Zone (24%), examining contexts where workers resist automation despite technical capability—trust and authenticity concerns, ethical issues around data sovereignty and accessibility, and high-stakes communication requiring human judgment. The Green Light Zone (12%) focuses primarily on pedagogical applications where instructors can scaffold appropriate AI use. Notably, we have zero articles in the Low Priority Zone, demonstrating disciplinary wisdom in avoiding low-value applications.

Current research distribution:

R&D Opportunity Zone: 43% (balanced pedagogy/workplace, addressing collaboration complexity)

Red Light Zone: 24% (60% workplace, ethical resistance to automation)

Green Light Zone: 12% (80% pedagogical applications)

Low Priority Zone: 0% (appropriately avoided)

This strong foundation in the R&D zone positions us well, but four directions would further strengthen our leadership and focus on innovative impact:

1. Workplace Implementation Research

Document how communication professionals deploy AI and redesign workflows. This calls for case studies of organizations using AI for routine communication tasks, with analysis of where professionals intervene in system design.

2. Rhetorical Context Engineering Partnerships

Partner with technical teams building AI agents. We analyze communication situations, decompose workflows, and identify the contextual information that makes AI effective for collaborative tasks. Example: Microsoft’s credit card annotation project required analyzing who reviews what information in what order before building effective AI assistance.

3. AI Skills for Practitioners

Translate our literacy research into professional development. The workplace knows AI should help but struggles with implementation. We can teach professionals how to collaborate with AI for the majority of desirable and feasible tasks identified in the WORKBank research.

4. Communication-Specific Desire-Capability Mapping

Replicate Shao et al.’s approach specifically for communication tasks and roles. Create detailed maps of where our majors work and where the greatest R&D opportunities exist for business and professional communication applications.

At our new AI Innovation Studio in the Student Innovation Center at Iowa State University, we’re committed to this vision: transforming students from AI consumers to innovative creators who build tools that augment human capabilities. We’re working across no-code to advanced code applications, connecting student innovation to industry challenges.

Why This Matters

Every technological wave amplifies sophisticated communication needs. Team communication platforms didn’t eliminate meetings; they made communication visibility and multicommunication management more critical (Anders, 2016). AI won’t eliminate business communication expertise. It makes rhetorical context engineering, workflow design, and human-centered collaboration more essential.

We’re positioned to architect AI innovation, not just adapt to it. Our decades of work on rhetorical analysis, communication processes, and organizational context are precisely what’s needed to exploit that underserved R&D opportunity zone—to build AI systems that partner with humans rather than replace them.

References

Anders, A. (2016). Team Communication Platforms and Emergent Social Collaboration Practices. International Journal of Business Communication, 53(2), 224–261. https://doi.org/10.1177/2329488415627273

Anders, A. D., & Dux Speltz, E. (2025). Developing generative AI literacies through self-regulated learning: A human-centered approach. Computers and Education: Artificial Intelligence, 9, 100482. https://doi.org/10.1016/j.caeai.2025.100482

Brynjolfsson, E., Chandar, B., & Chen, R. (2025, August 26). Canaries in the Coal Mine? Six Facts about the Recent Employment Effects of Artificial Intelligence. Working paper. https://digitaleconomy.stanford.edu/wp-content/uploads/2025/08/Canaries_BrynjolfssonChandarChen.pdf

Dell’Acqua, F., Ayoubi, C., Lifshitz-Assaf, H., Sadun, R., Mollick, E. R., Mollick, L., Han, Y., Goldman, J., Nair, H., Taub, S., & Lakhani, K. R. (2025). The Cybernetic Teammate: A Field Experiment on Generative AI Reshaping Teamwork and Expertise. SSRN. https://doi.org/10.2139/ssrn.5188231

Gimbel, M., Kinder, M., & Lee, M. (2025, October 1). Evaluating the Impact of AI on the Labor Market: Current State of Affairs. The Budget Lab at Yale. https://budgetlab.yale.edu/research/evaluating-impact-ai-labor-market-current-state-affairs

Shao, Y., Zope, H., Jiang, Y., Pei, J., Nguyen, D., Brynjolfsson, E., & Yang, D. (2025). Future of Work with AI Agents: Auditing Automation and Augmentation Potential across the U.S. Workforce (arXiv:2506.06576). arXiv. https://arxiv.org/abs/2506.06576
